Performance Evaluation Methodology for Image Mosaicing Algorithms

Topic Description

Image mosaicing is an active field in the computer vision community, and several algorithms have been proposed in recent years. By contrast, little work has been done on characterizing their performance, and a thorough and fair comparison of the proposals does not exist thus far. Moreover, existing surveys do not provide a unifying theoretical framework, and simple enumerations of different approaches are unlikely to yield new insights.

This research line aims at designing a complete and principled evaluation methodology for comparing the performance of image mosaicing algorithms. The proposal includes:

- data sets, comprising several realistic synthetic sequences of increasing complexity

- evaluation procedure, providing a fair and principled assessment of the performance

- performance metrics, simple and meaningful indicators of the perceived quality of the mosaic

- CVLab Mosaicing Evaluation, a website that allows researchers to evaluate and compare their mosaicing algorithms on a reference data set with ground truth.

Closely tied to the methodology, work on the classification and taxonomy of the algorithms is in progress. The effort lies in tracing every algorithm back to a unified theoretical framework and to a finite set of design decisions. We are firmly convinced that a common framework and the dissection of the algorithms into discrete components will substantially improve the understanding and the advancement of this computer vision topic.

Evaluation Methodology

As previously mentioned, this research line focuses on the different aspects of a complete comparison methodology: data sets, evaluation procedure and performance metrics.

The data sets have been collected using a so-called Virtual Camera (VC). The VC is a software module that simulates the image formation process of today's imaging devices. All the parameters are fully customizable, from the pose and position of the observer to the sensor dimension, snapshot resolution, focal length and so on. Once a scene is selected, snapshots are taken as if they were framed by a real camera [1]. Moreover, the ground truth associated with the sequence is simply the framed scene itself.
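As a rough illustration of the kind of image formation model the VC simulates, the following Python sketch projects a 3D scene point through an ideal pinhole camera. It is not the actual VC implementation: the pinhole model, the function names `intrinsics` and `project`, and the example parameter values are assumptions made for illustration only.

```python
def intrinsics(focal_mm, sensor_w_mm, sensor_h_mm, width_px, height_px):
    """Build a 3x3 pinhole intrinsic matrix (assumed model, no distortion).

    The focal length is converted from millimetres to pixels using the
    sensor dimension and the snapshot resolution; the principal point is
    assumed to lie at the image centre.
    """
    fx = focal_mm * width_px / sensor_w_mm
    fy = focal_mm * height_px / sensor_h_mm
    cx = width_px / 2.0
    cy = height_px / 2.0
    return [[fx, 0.0, cx],
            [0.0, fy, cy],
            [0.0, 0.0, 1.0]]


def project(K, R, t, point):
    """Project a 3D scene point to pixel coordinates.

    R (3x3 rotation) and t (3-vector) describe the observer pose and map
    scene coordinates to camera coordinates: Xc = R * X + t.
    """
    xc = [sum(R[i][j] * point[j] for j in range(3)) + t[i] for i in range(3)]
    if xc[2] <= 0:
        raise ValueError("point is behind the camera")
    u = K[0][0] * xc[0] / xc[2] + K[0][2]
    v = K[1][1] * xc[1] / xc[2] + K[1][2]
    return (u, v)


# Hypothetical camera: 50 mm lens, 36x24 mm sensor, 720x480 snapshot.
K = intrinsics(50.0, 36.0, 24.0, 720, 480)
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
centre = project(K, identity, [0.0, 0.0, 0.0], [0.0, 0.0, 5.0])
```

In this sketch a point on the optical axis lands on the image centre, and changing the pose `(R, t)` between snapshots yields a sequence whose per-frame camera parameters are known by construction.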

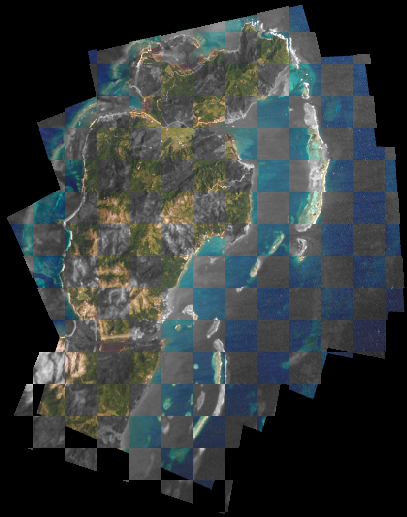

Besides data sets, the evaluation procedure is perhaps the most important part of a methodology. Each algorithm is evaluated based only on its output, namely the mosaic it creates. To make the comparison as fair as possible, we require that a specified frame of the sequence (i.e. the first one) act as an anchor. The anchor frame must be placed at a given position and orientation within the mosaic. Every other frame is warped and blended according to the transformation inferred by the algorithm. Imposing the anchor allows the resulting mosaic to be rendered in the same reference frame as the ground truth. Once ground truth and mosaic are registered, performance metrics based on the comparison of corresponding pixels become appropriate. Since commercial products do not permit the end user to set an anchor, a more sophisticated procedure has been conceived to overcome this limitation; for further reading refer to [3].

Finally, the evaluation metrics. Currently we collect statistics on the sum of squared differences (SSD) between the ground truth scene and the resulting mosaic, together with a few additional indicators such as the shortage or excess of pixels with respect to the ground truth. This approach is simple and straightforward. In the near future we plan to investigate the selection of the most appropriate evaluation metrics (e.g. perceptual metrics).
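One way an algorithm could honor the anchor constraint is sketched below in Python. The sketch assumes that each frame is mapped into the mosaic by a 3x3 homography, which the text above does not prescribe; the helper names (`matmul3`, `inv3`, `translation`, `impose_anchor`) are hypothetical. All transformations are re-expressed so that the anchor frame lands exactly at the required pose while the relative alignment inferred by the algorithm is preserved.

```python
def matmul3(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def inv3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("singular transformation")
    adj = [[e * i - f * h, c * h - b * i, b * f - c * e],
           [f * g - d * i, a * i - c * g, c * d - a * f],
           [d * h - e * g, b * g - a * h, a * e - b * d]]
    return [[x / det for x in row] for row in adj]


def translation(tx, ty):
    """Pure-translation homography, used here only to build the example."""
    return [[1.0, 0.0, tx], [0.0, 1.0, ty], [0.0, 0.0, 1.0]]


def impose_anchor(transforms, anchor_index, anchor_pose):
    """Re-express frame-to-mosaic transforms so the anchor sits at anchor_pose.

    Every transform is left-multiplied by the same correction,
    anchor_pose * inverse(transforms[anchor_index]), so the relative
    alignment between frames is unchanged.
    """
    correction = matmul3(anchor_pose, inv3(transforms[anchor_index]))
    return [matmul3(correction, t) for t in transforms]


# Two frames estimated in an arbitrary reference frame; the first is the anchor
# and is required to sit at the mosaic origin.
estimated = [translation(10.0, 5.0), translation(30.0, 5.0)]
anchored = impose_anchor(estimated, 0, translation(0.0, 0.0))
```

After the correction the anchor transform is the identity and the second frame keeps its 20-pixel horizontal offset from the anchor, so the mosaic can be compared pixel by pixel against a ground truth rendered with the same anchor pose.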
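The indicators described above can be sketched in a few lines of Python. This is a minimal illustration, not the code used for the evaluation: it assumes grayscale images stored as nested lists of equal size, already registered in the same reference frame, with `None` marking pixels that an image does not cover. The function name `compare_mosaic` and the dictionary keys are hypothetical.

```python
def compare_mosaic(ground_truth, mosaic):
    """Compare a registered mosaic with the ground truth, pixel by pixel.

    Returns the SSD over the pixels covered by both images, plus the number
    of pixels missing from the mosaic (covered only by the ground truth)
    and the number of excess pixels (covered only by the mosaic).
    """
    ssd = 0.0
    overlap = 0
    missing = 0
    excess = 0
    for gt_row, mo_row in zip(ground_truth, mosaic):
        for gt, mo in zip(gt_row, mo_row):
            if gt is not None and mo is not None:
                ssd += (gt - mo) ** 2
                overlap += 1
            elif gt is not None:
                missing += 1
            elif mo is not None:
                excess += 1
    return {"ssd": ssd, "overlap": overlap,
            "missing": missing, "excess": excess}


# Tiny 2x3 example: None marks pixels outside an image's footprint.
gt = [[10, 20, None],
      [30, 40, None]]
mo = [[12, None, 5],
      [30, 41, None]]
stats = compare_mosaic(gt, mo)
```

Dividing `ssd` by `overlap` would give a per-pixel mean, which makes sequences with mosaics of different sizes easier to compare; whether the evaluation normalizes in this way is not stated here.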

Results

In this section, some snapshots from two evaluation sequences, Pure Translation and Random Motion, are shown together with the respective ground truth. Both sequences have been acquired using the Virtual Camera, and the ground truth transformations and camera parameters are known for each frame.

| Samples from sequence Pure Translation | Ground truth mosaic for sequence Pure Translation |

| Samples from sequence Random Motion | Ground truth mosaic for sequence Random Motion |

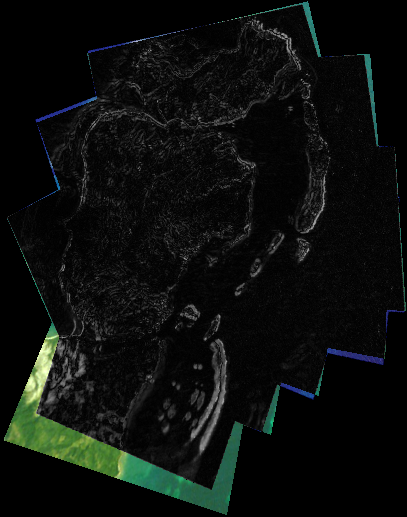

The following are some of the performance indicators obtained by applying the evaluation methodology to the algorithm SeqRT-Mosaic [2].

| SSDMap for algorithm [2] on Pure Translation | Checkerboard for algorithm [2] on Pure Translation |
| SSDMap for algorithm [2] on Random Motion | Checkerboard for algorithm [2] on Random Motion |
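For readers unfamiliar with these two visualizations, the Python sketch below shows one plausible way to build them. It is an assumption about what "SSDMap" and "Checkerboard" denote here: a per-pixel squared-difference image, and a composite that alternates square tiles taken from the ground truth and from the mosaic so that misalignments show up as broken edges at tile borders. The function names and the tile size are hypothetical.

```python
def squared_difference_map(ground_truth, mosaic):
    """Per-pixel squared difference between two registered grayscale images."""
    return [[(gt - mo) ** 2 for gt, mo in zip(gt_row, mo_row)]
            for gt_row, mo_row in zip(ground_truth, mosaic)]


def checkerboard(ground_truth, mosaic, tile):
    """Alternate tile x tile blocks from the ground truth and the mosaic.

    Blocks whose (row, column) block indices sum to an even number come
    from the ground truth, the others from the mosaic.
    """
    out = []
    for y, (gt_row, mo_row) in enumerate(zip(ground_truth, mosaic)):
        row = []
        for x, (gt, mo) in enumerate(zip(gt_row, mo_row)):
            use_gt = ((y // tile) + (x // tile)) % 2 == 0
            row.append(gt if use_gt else mo)
        out.append(row)
    return out


# 4x4 toy images: ground truth all 0, mosaic all 1, tiles of 2x2 pixels.
gt = [[0] * 4 for _ in range(4)]
mo = [[1] * 4 for _ in range(4)]
board = checkerboard(gt, mo, 2)
diff = squared_difference_map(gt, mo)
```

With real images a well-registered mosaic produces a checkerboard in which scene structures continue seamlessly across tile borders, while the squared-difference map highlights where the residual error concentrates.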

For an exhaustive review of the experimental results, please visit CVLab Mosaicing Evaluation.

Research Directions

Our current research addresses the following major issues.

- Virtual Camera: improvements of the synthetic image formation process to obtain more realistic views. The VC will model sensor noise, optical distortion and photometric distortion, and it will also feature independently moving objects as additional disturbance factors.

- Evaluation methodology: as the Virtual Camera gains capabilities, the evaluation methodology will be enriched with new, more challenging sequences and performance metrics. Our interest is in perceptual quality metrics and in statistical indicators that describe the error distribution, rather than conventional parameters such as moments.

- Informed survey: an exhaustive classification and principled framework that will hopefully bring all the mosaicing algorithms under the same roof is in progress. For a first version of this work please refer to [2].

References

| [1] | P. Azzari and L. Di Stefano, Performance Evaluation Methodology for Image Mosaicing Algorithms, CV-Lab Technical Report TR-2007-10, University of Bologna, October 2007. |

| [2] | P. Azzari, General purpose real-time image mosaicing, poster session of ICVSS, July 2007. |