Multi-View Information Fusion

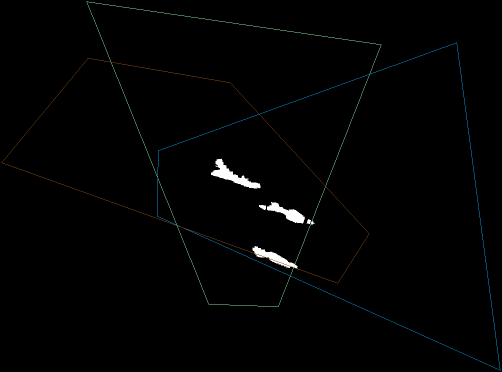

Having multiple synchronized video sequences of a scene available makes it possible to exploit more information in order to deal effectively with disturbance factors. In cooperation with CVLAB at EPFL, Lausanne, we devised a multi-view information fusion paradigm that builds directly on the results obtained for single-view change detection. In particular, the task of filtering out appearance changes due to camera noise, dynamic adjustments of camera parameters, and global scene illumination changes is assigned to a robust single-view change detector run independently in each view. The multi-view constraint is then applied by fusing the resulting single-view change masks into a common virtual top view. This makes it possible to identify and filter out appearance changes corresponding to scene points lying on the ground plane, thus detecting and removing false changes due, for instance, to shadows cast by moving objects or light spots hitting the ground surface.

| View 1: current frame | View 2: current frame | View 3: current frame |

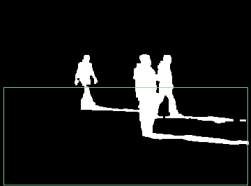

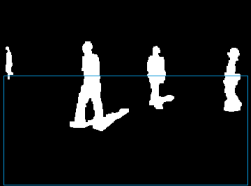

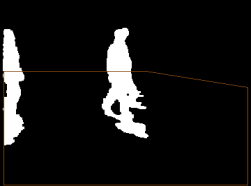

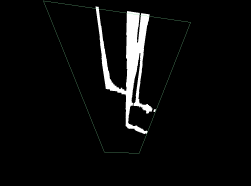

| View 1: single-view change mask | View 2: single-view change mask | View 3: single-view change mask |

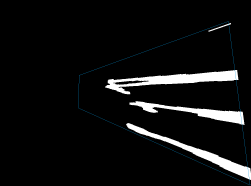

| View 1: projected change mask | View 2: projected change mask | View 3: projected change mask |

| Intersection of the projected single-view change masks |

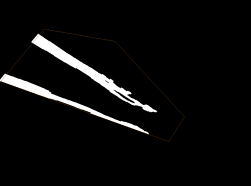

| View 1: final change mask | View 2: final change mask | View 3: final change mask |
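
The stages illustrated above (single-view masks, projection to the top view, intersection, final masks) can be sketched roughly as follows. This is only a minimal illustration, not the published implementation. It assumes that each camera has a known image-to-ground-plane homography, uses nearest-neighbour sampling, and assumes that the final masks are obtained by back-projecting the intersection into each view and clearing those pixels. The names `warp_mask`, `fuse_and_filter`, and `H_img_to_top` are hypothetical.

```python
import numpy as np

def warp_mask(mask, H_dst_to_src, dst_shape):
    """Inverse-warp a boolean mask with a 3x3 homography (nearest neighbour).

    For every destination pixel (x, y), H_dst_to_src gives the source pixel
    whose value is copied. Pixels that map outside the source stay False.
    """
    mask = np.asarray(mask, dtype=bool)
    h, w = dst_shape
    ys, xs = np.mgrid[0:h, 0:w]
    pts = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])  # 3 x N, homogeneous
    src = H_dst_to_src @ pts
    ok = np.abs(src[2]) > 1e-12              # skip points mapped to infinity
    u = np.full(h * w, -1.0)
    v = np.full(h * w, -1.0)
    u[ok] = src[0, ok] / src[2, ok]
    v[ok] = src[1, ok] / src[2, ok]
    ui = np.rint(u).astype(int)
    vi = np.rint(v).astype(int)
    inside = ok & (ui >= 0) & (ui < mask.shape[1]) & (vi >= 0) & (vi < mask.shape[0])
    out = np.zeros(h * w, dtype=bool)
    out[inside] = mask[vi[inside], ui[inside]]
    return out.reshape(h, w)

def fuse_and_filter(masks, H_img_to_top, top_shape):
    """Fuse single-view change masks in a virtual top view.

    masks        : list of boolean change masks, one per view
    H_img_to_top : list of 3x3 homographies mapping image pixels (x, y, 1)
                   of each view onto top-view pixels (assumed to be known)
    Returns the projected masks, their intersection, and the filtered masks.
    """
    masks = [np.asarray(m, dtype=bool) for m in masks]
    # Project every single-view mask onto the top view.
    projected = [warp_mask(m, np.linalg.inv(H), top_shape)
                 for m, H in zip(masks, H_img_to_top)]
    # Changes lying on the ground plane project to the same top-view
    # location in all views, so they survive the intersection.
    ground = np.logical_and.reduce(projected)
    # Assumed filtering step: back-project the intersection into each view
    # and remove those pixels from the single-view mask.
    final = [m & ~warp_mask(ground, H, m.shape)
             for m, H in zip(masks, H_img_to_top)]
    return projected, ground, final
```

With real data, the homographies would come from camera calibration, and an image-processing library with interpolated warping could replace the nearest-neighbour lookup used here.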

References

[1] A. Lanza, L. Di Stefano, J. Berclaz, F. Fleuret, and P. Fua, "Robust multi-view change detection," in Proc. British Machine Vision Conference (BMVC’07), Sep. 2007.